PUR513 – Securing Microsoft 365 Copilot and AI Data with Microsoft Purview

Introduction

AI is changing the way we work, and Microsoft 365 Copilot is leading that transformation.

But with great power comes a familiar question:

“How do we make sure AI doesn’t expose, misuse, or learn from sensitive data?”

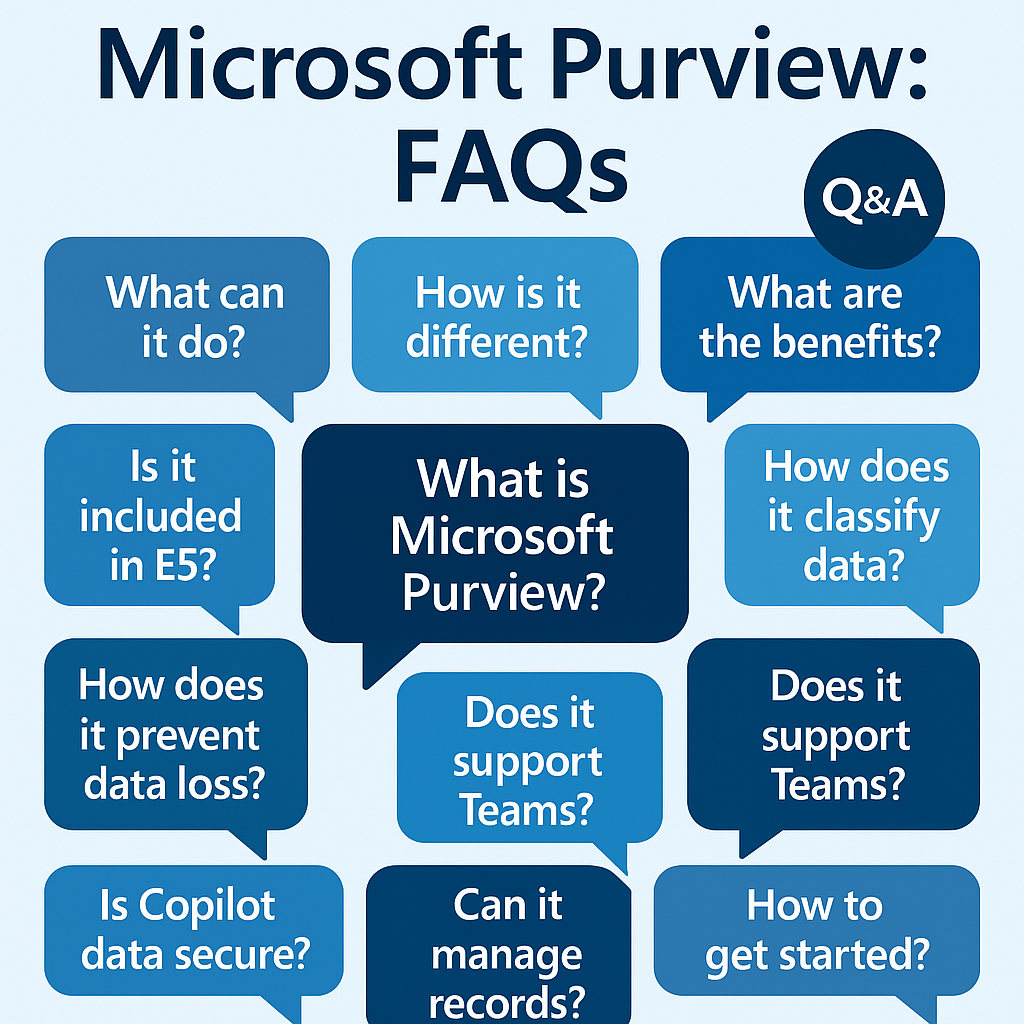

Enter Microsoft Purview for AI data security, the layer that ensures everything Copilot touches is governed, protected, and compliant.

In this article, we’ll explore how Microsoft Purview secures data flowing through Copilot and other AI-powered tools, without slowing innovation.

The AI Data Security Challenge

AI tools like Copilot access data across Microsoft 365: emails, chats, documents, and meeting notes.

While this creates powerful insights, it also expands your data exposure surface.

Key risks include:

- Unintended data exposure: AI might summarize or reveal restricted information.

- Sensitive data in prompts: Users could feed sensitive data into AI models through the prompts they type or paste.

- Data persistence: Generated content might contain confidential details.

- Regulatory impact: AI outputs may be subject to GDPR, HIPAA, or financial data laws.

Microsoft Purview mitigates these challenges with built-in controls that extend from your existing data protection policies.

Microsoft’s Responsible AI Approach

Before diving into tools, it’s important to understand Microsoft’s Responsible AI principles, which underpin Copilot security:

- Fairness and accountability – AI should act ethically and transparently.

- Privacy and security by design – Data access is governed by Microsoft 365 permissions and tenant boundaries.

- Compliance alignment – Copilot inherits your Microsoft Purview configurations.

In short: Copilot doesn’t create new data risks; it reflects your existing data governance maturity.

How Copilot Interacts with Microsoft 365 Data

When a user prompts Copilot, it:

- Uses the user’s Microsoft Entra ID context to determine access.

- Retrieves data from sources like SharePoint, OneDrive, Exchange, Teams, and Loop, but only what the user already has permission to access.

- Generates a response using the Microsoft Graph and the Large Language Model (LLM) running in a secure Microsoft environment.

- Does not train the foundation model on your organizational data.

That means access control, encryption, labeling, and DLP policies still apply, and Purview governs all of it.
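The permission-trimming idea can be illustrated with a small sketch. This is a toy model, not how Copilot or Microsoft Graph is implemented: the `Document` class, the `allowed_users` field, and `retrieve_for_user` are hypothetical names invented for illustration. It only shows the principle that retrieval is filtered by what the user can already open.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical stand-in for an item in SharePoint, OneDrive, and so on."""
    title: str
    allowed_users: frozenset[str]


def retrieve_for_user(user: str, corpus: list[Document], query: str) -> list[Document]:
    """Return documents matching the query that the user may already read."""
    query_lower = query.lower()
    return [
        doc for doc in corpus
        if user in doc.allowed_users and query_lower in doc.title.lower()
    ]


corpus = [
    Document("Q3 budget forecast", frozenset({"alice", "bob"})),
    Document("Q3 budget board memo", frozenset({"alice"})),
    Document("Team lunch menu", frozenset({"alice", "bob", "carol"})),
]

# Bob asks about the budget: only the document he can already open is used.
bob_results = retrieve_for_user("bob", corpus, "budget")
print([doc.title for doc in bob_results])  # prints ['Q3 budget forecast']
```

The board memo never reaches Bob's response because the access check happens before any content is handed to the model. The same logic explains why overshared files are the real risk: if Bob had been granted access by mistake, the filter would let the memo through.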

How Microsoft Purview Protects AI Data

Purview extends its Information Protection, DLP, and Audit capabilities into AI-powered workflows.

| Purview Capability | AI Use Case | Example |
| --- | --- | --- |
| Sensitivity Labels | Classify and protect files accessed by Copilot | “Confidential–Legal” documents remain encrypted and inaccessible to unauthorized users |
| Data Loss Prevention (DLP) | Monitor sensitive data submitted in prompts or generated responses | Block users from typing credit card numbers into Copilot |
| Insider Risk Management | Detect risky behavior involving AI | Alert when users paste confidential text into third-party AI tools |
| Audit (Premium) | Record all Copilot interactions | Log when and which data sources Copilot accessed |
| Information Protection Scanner | Discover unclassified data Copilot could access | Identify sensitive SharePoint or OneDrive content at risk |

These capabilities ensure that data protection follows the AI workflow, not just traditional human actions.

Copilot Activity Logging with Microsoft Purview Audit

Microsoft Purview Audit (Premium) now logs specific Copilot-related events, such as:

- CopilotInteraction: when a user engages with Copilot.

- CopilotDataAccessed: which sources and files were referenced.

- CopilotSessionID: unique identifier for tracking user sessions.

These logs help compliance and security teams:

- Verify AI data access during audits.

- Investigate potential misuse.

- Prove responsible AI usage to regulators.

🧠 Tip: Use Power BI or Microsoft Sentinel to visualize Copilot interaction trends and identify high-risk patterns.
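Before building dashboards, a quick script over exported audit records can already surface trends. The sketch below assumes a JSON export; the field names `Operation`, `UserId`, and `AccessedFiles` and the sample records are illustrative assumptions, not the documented audit schema, so adjust them to match what your export actually contains.

```python
import json
from collections import Counter

# Hypothetical export. Field names are illustrative assumptions,
# not the documented audit schema.
SAMPLE_EXPORT = """
[
  {"Operation": "CopilotInteraction", "UserId": "alice@contoso.com",
   "AccessedFiles": ["budget.xlsx", "forecast.docx"]},
  {"Operation": "CopilotInteraction", "UserId": "bob@contoso.com",
   "AccessedFiles": ["budget.xlsx"]},
  {"Operation": "FileAccessed", "UserId": "bob@contoso.com",
   "AccessedFiles": ["notes.txt"]},
  {"Operation": "CopilotInteraction", "UserId": "alice@contoso.com",
   "AccessedFiles": []}
]
"""


def summarize(records: list[dict]) -> tuple[Counter, Counter]:
    """Count Copilot interactions per user and references per file."""
    per_user: Counter = Counter()
    per_file: Counter = Counter()
    for record in records:
        if record.get("Operation") != "CopilotInteraction":
            continue  # ignore non-Copilot events
        per_user[record["UserId"]] += 1
        per_file.update(record.get("AccessedFiles", []))
    return per_user, per_file


per_user, per_file = summarize(json.loads(SAMPLE_EXPORT))
print(per_user.most_common())
print(per_file.most_common(1))
```

Files that Copilot references far more often than others are good candidates for a labeling and permissions review.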

DLP for AI: Protecting Prompts and Responses

Copilot prompts and outputs are subject to Microsoft Purview DLP rules.

Example:

- A user tries to ask Copilot: “Summarize customer payment details from last quarter.”

- The prompt, or the content it would surface, includes financial data matching a Sensitive Information Type (SIT).

- Purview DLP detects the match and can:

  - Warn the user.

  - Block the request.

  - Log it for compliance review.

This helps prevent data exfiltration via AI prompts, a growing area of concern for enterprises adopting generative AI.
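To make the detect-then-act flow concrete, here is a minimal sketch of a credit card detector built from a pattern plus a Luhn checksum, followed by a configurable action. It is not Purview's implementation: the regular expression, the `Action` enum, and `evaluate_prompt` are hypothetical, and real SITs add supporting keywords and confidence levels that this sketch leaves out.

```python
import re
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit counting from the right."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings that look like card numbers and pass Luhn."""
    candidates = (re.sub(r"[ -]", "", m.group()) for m in CARD_CANDIDATE.finditer(text))
    return [digits for digits in candidates if luhn_valid(digits)]


def evaluate_prompt(prompt: str, on_match: Action = Action.BLOCK) -> tuple[Action, list[str]]:
    """Apply the configured action on a match and return entries to log."""
    findings = find_card_numbers(prompt)
    if not findings:
        return Action.ALLOW, []
    audit_log = [f"card number ending {number[-4:]} detected" for number in findings]
    return on_match, audit_log


# 4111 1111 1111 1111 is a widely used test number that passes Luhn.
print(evaluate_prompt("Refund the card 4111 1111 1111 1111 please"))
```

The checksum step matters: without it, any 16-digit order or tracking number would trigger the policy, and users quickly learn to ignore noisy warnings.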

Extending Purview Controls to Third-Party AI Tools

For organizations using multiple AI systems, Microsoft Defender for Cloud Apps (formerly Microsoft Cloud App Security, MCAS) complements Purview by monitoring external tools like ChatGPT, Gemini, or Claude.

You can:

- Detect when users upload corporate data to third-party AI sites.

- Apply DLP or block risky web sessions.

- Use Conditional Access App Control to restrict access.

Together, Purview and Defender create a unified AI governance perimeter, securing both internal and external AI activity.
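The decision logic behind this kind of session control can be sketched in a few lines. This is an illustration only, not how Defender for Cloud Apps works internally: the domain list is a small hand-picked sample rather than the product's app catalog, and `is_ai_site` and `decide` are hypothetical helpers.

```python
from urllib.parse import urlparse

# Illustrative sample; a real deployment would rely on the product's app catalog.
GENERATIVE_AI_DOMAINS = {"chatgpt.com", "gemini.google.com", "claude.ai"}


def is_ai_site(url: str) -> bool:
    """True if the URL's host is a listed AI domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain)
               for domain in GENERATIVE_AI_DOMAINS)


def decide(url: str, contains_sensitive_data: bool) -> str:
    """Allow normal traffic, monitor AI sites, block sensitive uploads to them."""
    if not is_ai_site(url):
        return "allow"
    return "block" if contains_sensitive_data else "monitor"


print(decide("https://claude.ai/new", contains_sensitive_data=True))
print(decide("https://chatgpt.com/", contains_sensitive_data=False))
print(decide("https://contoso.sharepoint.com/sites/hr", contains_sensitive_data=True))
```

Matching on the exact host or a dot-prefixed suffix avoids false positives from lookalike domains such as `notclaude.ai`.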

Real-World Example: Protecting Legal Documents in Copilot

Scenario:

A global law firm uses Copilot to draft client summaries. Legal documents contain highly sensitive, labeled content.

Implementation:

- Apply “Confidential–Legal” sensitivity label with encryption and “View Only” permissions.

- Configure DLP to detect legal data types (case IDs, client names).

- Enable Copilot auditing for visibility into AI interactions.

- Review logs in Purview Audit and Compliance Manager for data flow analysis.

Outcome:

Copilot enhances productivity without exposing client data, and the firm can demonstrate compliance to regulators.

Compliance Manager and AI Readiness

Compliance Manager now includes AI-specific controls for frameworks like GDPR, ISO/IEC 42001 (AI management systems), and SOC 2.

You can track improvement actions such as:

- “Enable audit logging for Copilot interactions.”

- “Apply sensitivity labels to AI-accessible repositories.”

- “Establish user education on AI data handling.”

This creates measurable AI governance maturity, which is critical for risk assessments and certification.
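One simple way to think about "measurable" is a points-based score: each improvement action carries points, and the score is the share of points earned. The sketch below assumes that model; the point values and the `maturity_score` helper are hypothetical and do not reproduce Compliance Manager's actual scoring rules.

```python
from dataclasses import dataclass


@dataclass
class ImprovementAction:
    name: str
    points: int       # hypothetical weight, not an official value
    completed: bool


def maturity_score(actions: list[ImprovementAction]) -> float:
    """Percentage of available points earned by completed actions."""
    total = sum(action.points for action in actions)
    if total == 0:
        return 0.0
    achieved = sum(action.points for action in actions if action.completed)
    return round(100 * achieved / total, 1)


actions = [
    ImprovementAction("Enable audit logging for Copilot interactions", 10, True),
    ImprovementAction("Apply sensitivity labels to AI-accessible repositories", 10, False),
    ImprovementAction("Establish user education on AI data handling", 5, True),
]

print(maturity_score(actions))  # prints 60.0
```

Tracking this number over time gives a simple trend line to bring to a risk assessment.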

Real-World Tip

Treat AI as another data consumer, not a new system.

The same Purview controls that protect users protect AI too.

Focus on consistent labeling, DLP, and auditing rather than reinventing governance just for AI.

Exam Tip (SC-401)

Expect questions about:

- How Purview protects AI-generated or AI-accessed data.

- What Copilot activity is logged in Audit (Premium).

- How DLP prevents sensitive prompts.

- Integration with Defender for Cloud Apps for non-Microsoft AI tools.

Example:

A company wants to prevent users from submitting credit card data into Microsoft 365 Copilot. Which Purview capability achieves this?

Answer: Data Loss Prevention (DLP) for AI prompts.

Best Practices for AI Data Security

✅ Extend your existing labels and DLP policies to all Copilot-accessible repositories.

✅ Turn on Audit (Premium) for AI activity tracking.

✅ Use Compliance Manager’s AI readiness assessments.

✅ Integrate Defender for Cloud Apps for third-party AI visibility.

✅ Educate users: “Copilot respects your permissions, but you must respect the data.”

The Future: Data Security Posture Management (DSPM) for AI

Microsoft Purview is evolving into Data Security Posture Management (DSPM), offering continuous visibility into where sensitive data lives, who can access it, and how AI interacts with it.

Upcoming DSPM capabilities include:

- Risk-based insights for Copilot data exposure.

- Mapping sensitivity labels to AI prompts and outputs.

- Automatic remediation of risky data access.

The next frontier of Purview is AI-aware governance: proactive, automated, and adaptive.

Conclusion

Microsoft 365 Copilot doesn’t weaken your security; it amplifies it when paired with Microsoft Purview.

With labeling, DLP, auditing, and compliance frameworks all integrated, you can innovate responsibly while maintaining full control over your data.

Purview ensures that your organization’s leap into AI is secure, compliant, and trustworthy by design.

In the next article, PUR514 – Integrating Purview with Defender XDR, Entra ID, and Power Platform, we’ll explore how Purview connects across the Microsoft security ecosystem to unify compliance, identity, and threat protection.

I am Yogeshkumar Patel, a Microsoft Certified Solution Architect and Enterprise Systems Manager with deep expertise across Dynamics 365 Finance & Supply Chain, Power Platform, Azure, and AI engineering. With over six years of experience, I have led enterprise-scale ERP implementations, AI-driven and agent-enabled automation initiatives, and secure cloud transformations that optimise business operations and decision-making. Holding a Master’s degree from the University of Bedfordshire, I specialise in integrating AI and agentic systems into core business processes: streamlining supply chains, automating complex workflows, and enhancing insight-driven decisions through Power BI, orchestration frameworks, and governed AI architectures. Passionate about practical innovation and knowledge sharing, I created AIpowered365 to help businesses and professionals move beyond experimentation and adopt real-world, enterprise-ready AI and agent-driven solutions as part of their digital transformation journey.
